JTH

Project HAL-63

For years I’ve been collecting and analyzing market statistics, watching how conditions shift, how signals break down, and how outcomes often depend more on context than on the data itself. With recent advances in AI, I’ve been working to re-engineer how data is collected, structured, and interpreted in context with real-time events. AI plays a key role in this system. It doesn’t just add insight; it helps shape the framework, allowing me to configure diagnostic engines that can assign probabilities based on both recent signals and historical context stored in memory.

HAL-63 is a structured, context-based system designed to assign a probable outcome grounded in both historical and present conditions. It blends AI-driven logic with rule-based structure and human-aligned interpretation: an assisted-thinking model that supports clarity without removing control.

Broadly, it operates across three functional layers:

1. Data Layer

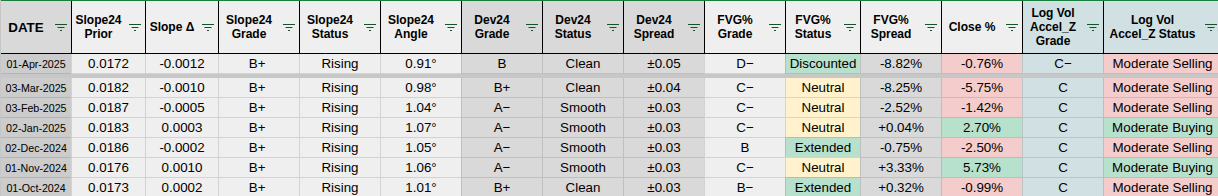

This is the system’s foundational input layer. It:

- Holds structured inputs such as raw price, volume, and a core triad of separated indicators to prevent multicollinearity

- Incorporates macro context such as monetary policy shifts, liquidity regimes, economic cycles, and scheduled events

- Applies rule-based logic for tagging and filtering

- Functions as a cold-standby, non-interruptive output engine. It provides a secondary baseline that can be compared against AI-driven diagnostic outputs for validation and fallback alignment. This supports a no-single-point-of-failure design by maintaining independence from the AI decision layer
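To make the rule-based tagging and the cold-standby baseline a little more concrete, here is a minimal Python sketch. It is not the actual HAL-63 code: the `Bar` fields, the two tagging rules, the 1.5x volume threshold, and the `reconcile` helper are all hypothetical, chosen only to show how a rule-only baseline can be computed without the AI layer and then compared against it.

```python
from dataclasses import dataclass, field


@dataclass
class Bar:
    """One period of structured input (hypothetical fields)."""
    close: float
    volume: float
    tags: set = field(default_factory=set)


# Hypothetical tagging rules: (tag name, predicate over current and prior bar).
RULES = [
    ("up_day", lambda bar, prev: bar.close > prev.close),
    ("high_volume", lambda bar, prev: bar.volume > 1.5 * prev.volume),
]


def tag_bar(bar, prev):
    """Apply every rule and attach the tags that fire."""
    for name, rule in RULES:
        if rule(bar, prev):
            bar.tags.add(name)
    return bar


def baseline_bias(bar):
    """Rule-only baseline, computed with no AI input at all."""
    if {"up_day", "high_volume"} <= bar.tags:
        return "bullish"
    if "high_volume" in bar.tags and "up_day" not in bar.tags:
        return "bearish"
    return "neutral"


def reconcile(ai_bias, bar):
    """Use the AI output when present, else fall back to the baseline.

    The second element reports how the two sources relate.
    """
    base = baseline_bias(bar)
    if ai_bias is None:
        return base, "fallback"
    return ai_bias, ("agree" if ai_bias == base else "diverge")
```

Because `baseline_bias` never looks at the AI output, it keeps working if the AI layer is unavailable, and a "diverge" status gives a cheap signal that the two sources should be reviewed.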

2. Operations Layer

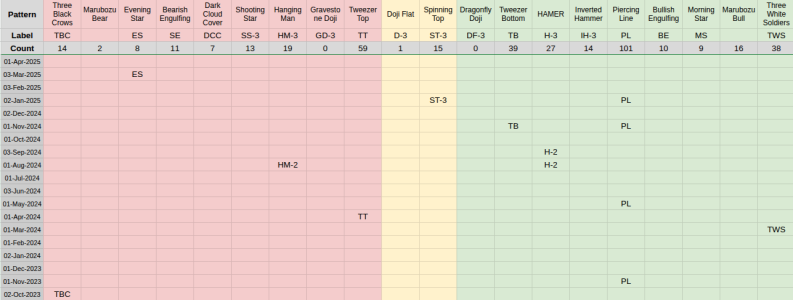

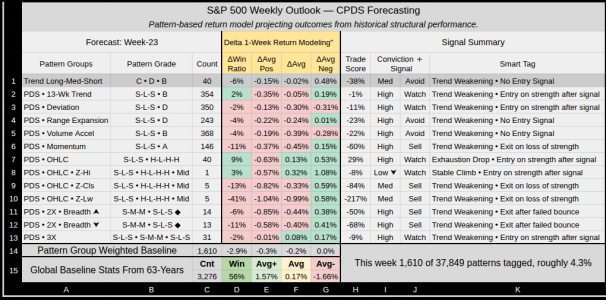

This is where real-time logic runs. The system:

- Interprets current market conditions through multiple independent signal paths. Keeping the logic paths for candlestick structure, momentum behavior, directional bias, and context tags separate helps reduce signal noise and prevents redundancy

- Assigns system state, forms probabilities, and defines forward actions
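As a rough illustration of how independent signal paths could roll up into a probability and a system state, here is a small Python sketch. Everything in it is an assumption made for the example: the path names, the score range of -1 to +1, the logistic mapping with a steepness of 3.0, and the 0.65 / 0.35 state thresholds. The real engines may combine paths very differently.

```python
import math


def combine_paths(scores, weights=None):
    """Blend per-path scores (each in [-1, 1]) into one reading.

    scores  -- dict of path name -> score, e.g. {"momentum": 0.4}
    weights -- optional dict of path name -> weight (defaults to equal)
    Returns (composite score, probability of an up move, state label).
    """
    names = sorted(scores)
    if weights is None:
        weights = {name: 1.0 for name in names}
    total = sum(weights[name] for name in names)
    composite = sum(scores[name] * weights[name] for name in names) / total

    # Logistic squashing: composite 0 maps to 0.5, extremes approach 0 or 1.
    prob_up = 1.0 / (1.0 + math.exp(-3.0 * composite))

    if prob_up >= 0.65:
        state = "risk_on"
    elif prob_up <= 0.35:
        state = "risk_off"
    else:
        state = "neutral"
    return composite, prob_up, state


if __name__ == "__main__":
    reading = combine_paths(
        {"candlestick": 0.2, "momentum": 0.6, "direction": 0.4}
    )
    print(reading)
```

Each path is scored on its own before the blend, so one noisy path can be down-weighted through `weights` without touching the others.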

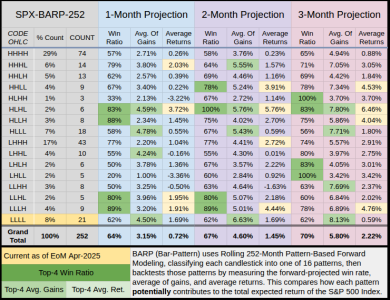

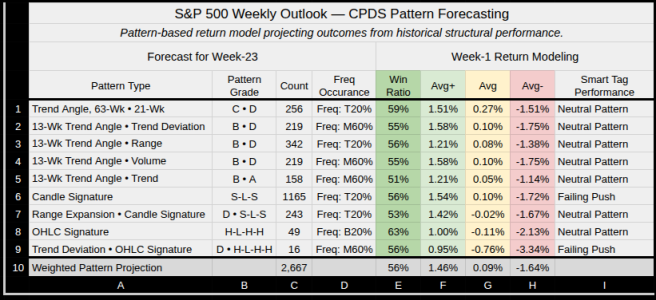

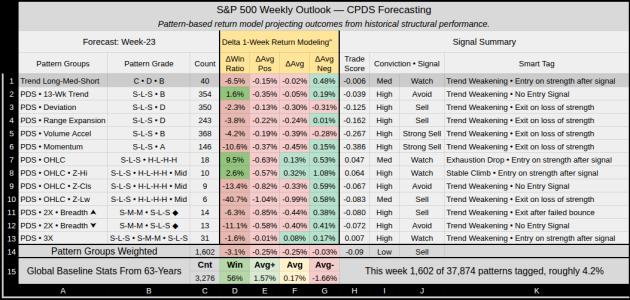

Contextual Pattern Detection System

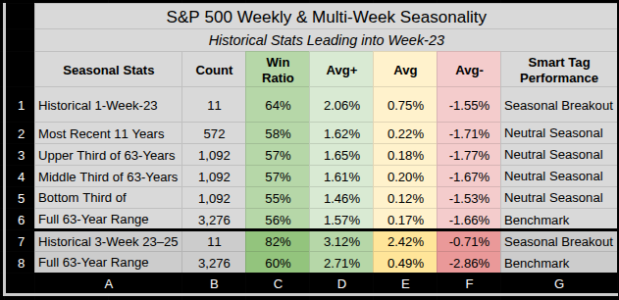

A hybrid engine within this layer that blends core indicator behavior with macro context.

- Frames patterns within liquidity, volatility, and cyclical timing

- Filters those formations that align with the current environment

- Rejects isolated patterns that lack structural or contextual backing
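The filtering idea above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Pattern` class, the tag names, and the rule that a pattern is accepted only when every one of its required context tags is present are all hypothetical, not the actual detection template.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pattern:
    """A detected formation plus the context it needs (hypothetical)."""
    name: str
    direction: str               # "bullish" or "bearish"
    required_context: frozenset  # environment tags that must be present


def filter_patterns(patterns, environment):
    """Split patterns into those backed by the environment and those not.

    A pattern with no required context counts as isolated and is rejected,
    as is any pattern whose required tags are missing from the environment.
    """
    accepted, rejected = [], []
    for pattern in patterns:
        backed = bool(pattern.required_context) and (
            pattern.required_context <= environment
        )
        (accepted if backed else rejected).append(pattern)
    return accepted, rejected


if __name__ == "__main__":
    env = frozenset({"high_liquidity", "low_volatility"})
    found = [
        Pattern("bull_flag", "bullish", frozenset({"high_liquidity"})),
        Pattern("hammer", "bullish", frozenset({"high_volatility"})),
        Pattern("doji", "bullish", frozenset()),
    ]
    ok, dropped = filter_patterns(found, env)
    print([p.name for p in ok], [p.name for p in dropped])
```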

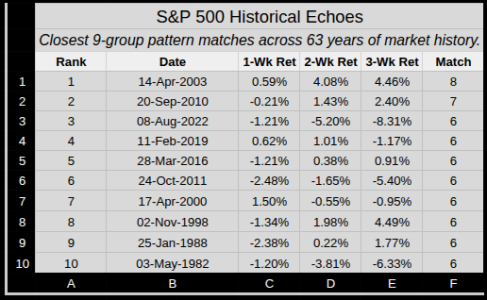

3. Echo Layer

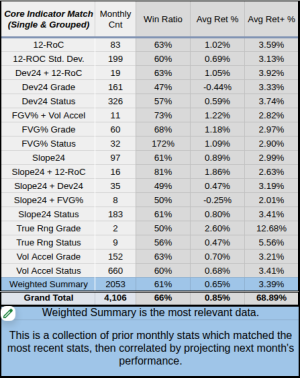

HAL-63’s long-term memory system. It:

- Stores recognized patterns, outcomes, environment tags, and how the system and market responded

- Doesn’t predict, but remembers, compares, and references

- Anchors live signals to past outcomes to tighten future bias

- Reinforces patterns that consistently resolved under similar conditions

- Filters out noise by deprioritizing previously failed setups

- Supports adaptive weighting and a clearer signal hierarchy as history deepens

- Eases workload on live diagnostic engines by recalling relevant past setups
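Here is a minimal Python sketch of the remember-and-weight idea, to show how reinforcement and deprioritization could fall out of simple bookkeeping. The `EchoMemory` class, its method names, and the smoothing formula are assumptions for illustration only. It also matches environments exactly by tag set, which is a simplification of "similar conditions".

```python
from collections import defaultdict


class EchoMemory:
    """Remembers how each (pattern, environment) pair resolved (hypothetical)."""

    def __init__(self):
        # key -> [times the setup resolved as expected, times it was seen]
        self._stats = defaultdict(lambda: [0, 0])

    def record(self, pattern, env_tags, resolved):
        """Store one outcome for a pattern seen under the given tags."""
        stats = self._stats[(pattern, frozenset(env_tags))]
        stats[0] += int(resolved)
        stats[1] += 1

    def weight(self, pattern, env_tags):
        """Smoothed success rate, used as a weight on the live signal.

        Laplace smoothing: unseen setups get 0.5, repeated successes drift
        toward 1, and repeated failures drift toward 0.
        """
        wins, total = self._stats.get((pattern, frozenset(env_tags)), (0, 0))
        return (wins + 1) / (total + 2)


if __name__ == "__main__":
    memory = EchoMemory()
    for _ in range(3):
        memory.record("bull_flag", {"high_liquidity"}, resolved=True)
    print(memory.weight("bull_flag", {"high_liquidity"}))
```

The memory makes no forecast of its own; it only scales how much a live signal is trusted, and the weights sharpen as more history accumulates.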

Currently Working On

- Building the default template for the Contextual Pattern Detection System (it's melting my brain)

- Finalizing the HAL-63 AI component. This is a secure, structured AI interface built to prioritize clarity and control. HAL-63 is designed with containment and reliability in mind, supporting directive-based interaction and role-specific behavior. In layman's terms, it's a basic program I've written consisting of an Enforcement Kernel, Global Directives, an Anchor Command Core, and a Persona Profile Matrix (the Diagnostic Engine Team).

If you have questions or comments, this thread is open to all, thanks...Jason
